published by: | 15 May 2020 | news

The University of Konstanz has shared a press release on our international research project "Living and Working in Times of the Coronavirus".

To the press release (English)

To the press release (German)

Take part here: http://iscience.uni.kn/corona

published by: | 09 April 2020 | news

Under the direction of Prof. Dr. Ulf-Dietrich Reips from the University of Konstanz and in collaboration with an international research network, the iScience Group is carrying out a study in 110 countries. The survey deals with the effects of COVID-19 on life and work almost everywhere in the world. The initiator and coordinator of this unprecedented study is the WageIndicator Foundation in Amsterdam, whose website publishes key results daily.

Since the first cases of infection in late 2019, the coronavirus and the health policy countermeasures taken against it have changed and impaired the lives of many citizens. These far-reaching measures have not been without consequences in the world of work: they have a substantial impact on work processes, income and job security. The results of the new study will be used for academic research and will also be made available to the general public daily in a visualized and meaningful form.

The study is primarily aimed at all working people, regardless of the type of employment. The questions revolve around life in general, the emotions triggered and the changing working conditions in times of the coronavirus.

With the help of this new study, the effects of the pandemic on experiences and behaviour can be captured and made visible in a cross-country comparison. Demographic questions on age, place of residence and living situation allow for differentiated analyses that can be used for future studies and recommendations for action.

An explanatory video can be viewed on YouTube (https://youtu.be/zw73tXd6VIA). The study is available at http://iscience.uni.kn/corona.

Take part here: http://iscience.uni.kn/corona

published by: | 02 January 2020 | news

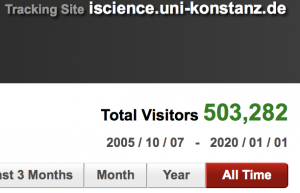

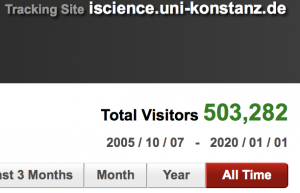

On January 1, 2020, our tracker reported 503’282 visitors to the iScience web presence since October 2005. iScience passed the half-million mark just before the change to the new decade, and about 25 years after Ulf-Dietrich Reips (then a PhD student at the University of Tübingen) set up the Web Experimental Psychology Lab, the first online laboratory for psychology experiments.

published by: | 06 September 2019 | news

The talk took place on Wednesday, September 11th, 2019, at 17:00 in A 703.

Everyone interested was welcome to attend and to join a reception afterwards.